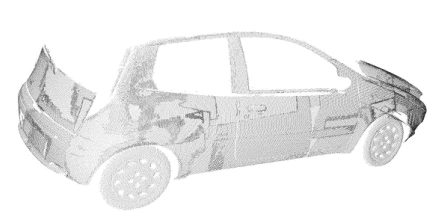

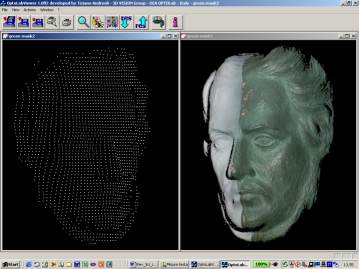

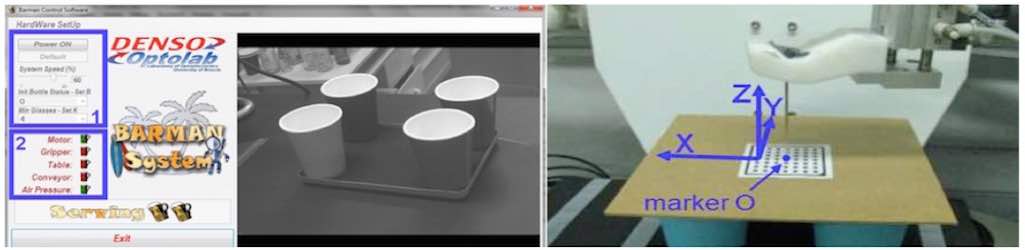

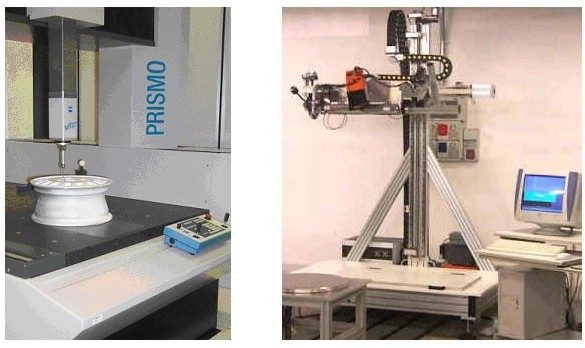

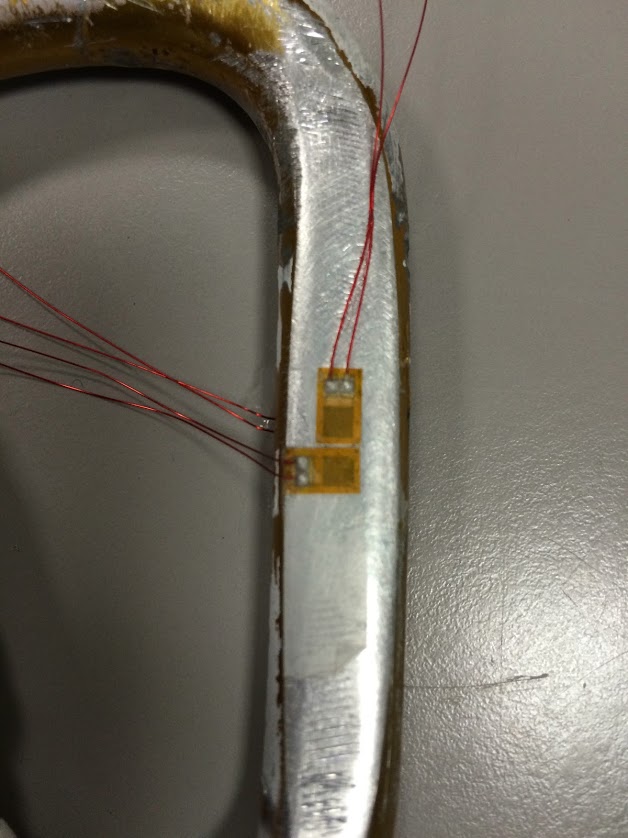

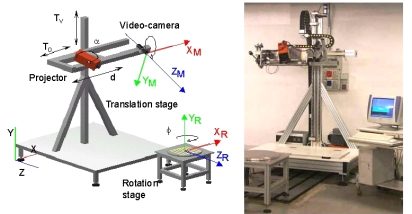

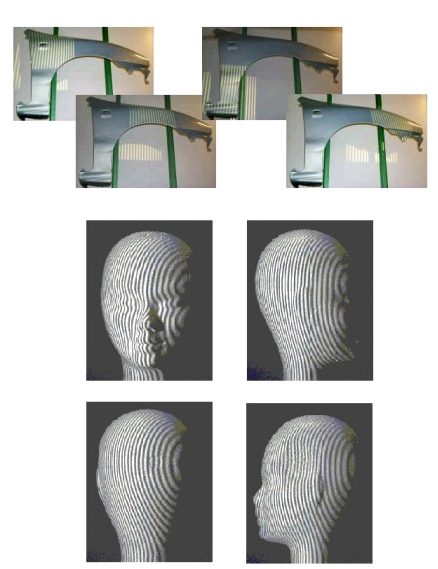

As shown in Fig. 1, the system is composed of the optical head and two moving stages. The optical head exploits active stereo vision. The LCD-based projection device projects two-dimensional patterns of non-coherent light onto the target object; these patterns are suitable for implementing the SGM, PSM, GCM and GCPS light-coding techniques. Each point cloud is expressed in the reference system of the optical head (XM, YM, ZM).

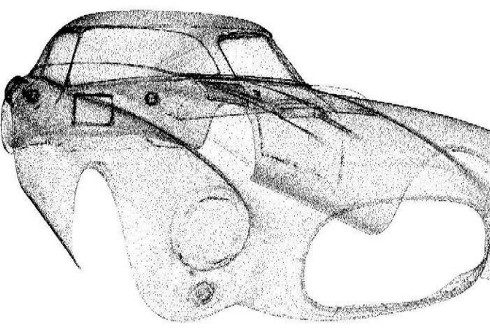

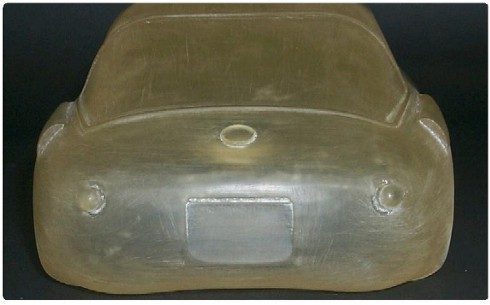

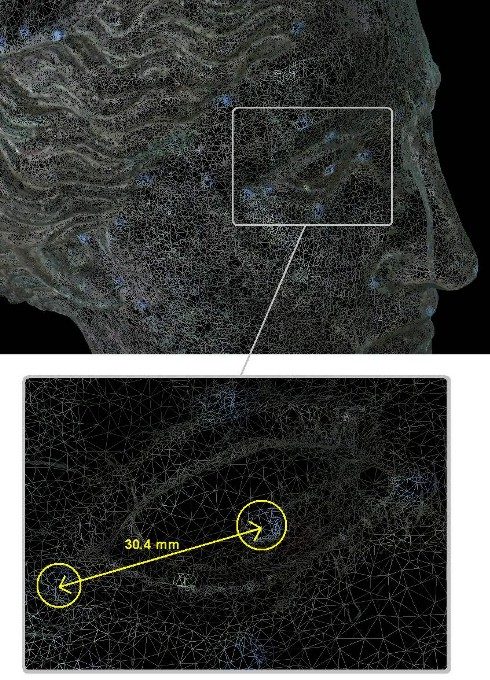

The optical head is mounted on a moving stage that translates it along the vertical and horizontal directions (Tv, To). As shown in Fig. 2, areas of up to two square meters can be scanned, and large surfaces can be measured by acquiring and aligning a number of separate patches. Objects with circular symmetry can be placed on a rotation stage, which enables the acquisition of views at different rotation angles.

The system is equipped with stepper motors that fully control the rotation stage and the translation of the optical head. Each view is aligned by means of roto-translation matrices into the coordinate system (XR, YR, ZR), centered on the rotation stage. The acquisition sequence of the range images is decided on the basis of the dimensions and shape of the object, and is performed automatically.
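The alignment step can be illustrated with a minimal sketch of a roto-translation applied to a point cloud. It is not the system's actual software: the function names, the choice of the vertical axis YR as the rotation axis, and the sample values are assumptions made only for illustration.

```python
import math


def roto_translation(angle_deg, translation):
    """Build a 4x4 homogeneous matrix: rotation about the Y axis, then translation.

    Assumption: the rotation stage turns about the vertical axis (YR).
    """
    a = math.radians(angle_deg)
    c, s = math.cos(a), math.sin(a)
    tx, ty, tz = translation
    return [
        [c, 0.0, s, tx],
        [0.0, 1.0, 0.0, ty],
        [-s, 0.0, c, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def apply_transform(matrix, point):
    """Apply a 4x4 homogeneous matrix to a 3D point (x, y, z)."""
    v = (point[0], point[1], point[2], 1.0)
    return tuple(
        sum(matrix[row][k] * v[k] for k in range(4)) for row in range(3)
    )


def align_view(points, angle_deg, translation):
    """Map one view from the head frame (XM, YM, ZM) to the stage frame (XR, YR, ZR).

    Hypothetical helper: angle_deg is the stage rotation for this view and
    translation is the head offset (for example, from the Tv and To stages).
    """
    m = roto_translation(angle_deg, translation)
    return [apply_transform(m, p) for p in points]


if __name__ == "__main__":
    view = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0)]
    for p in align_view(view, 90.0, (0.0, 5.0, 0.0)):
        print(tuple(round(c, 6) for c in p))
```

In a real setup, the angle and translation for each view would come from the motor positions and from the calibration of the optical head and rotation stage, rather than from hard-coded values.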

Fast calibration is provided, both for the optical head and for the rotation stage.

System Performance

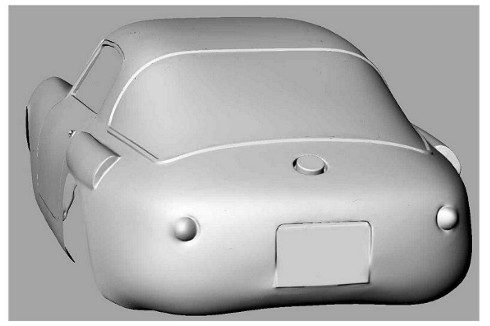

- Optical, non-contact digitization based on active triangulation and non-coherent light projection;

- Adjustable measuring area, from 100 x 100 mm to 400 x 400 mm (single view);

- Measuring error from 0.07 mm to 0.2 mm, scaling with the field of view;

- Automatic scanning, alignment and merging, mechanically controlled;

- Color/texture acquisition;

- PC-based system, with a 333 MHz Pentium II, Windows 98 and Visual C++ software;

- Import/Export formats for CAD, rapid prototyping, 3D-viewers, graphic platforms;

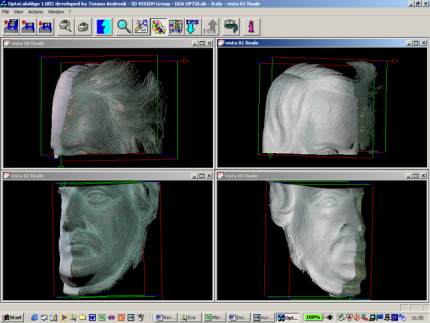

- Software interface for handling, processing and visualizing both partial and complete point clouds.