The projects that the students presented to complete the exam are described below. Each group consisted of two randomly selected members.

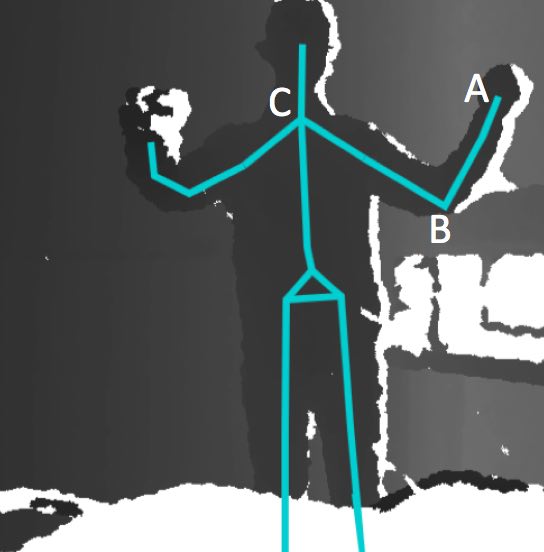

Group 1: Extrinsic calibration of 2 Kinects using both a sphere-based calibration and a skeletonization-based calibration

The group's task was to compare the extrinsic calibration results obtained with two methods: a calibration based on a green sphere and a custom algorithm developed by the University of Trento, and a calibration based on a skeletonization algorithm developed by our group.

Group 2: Intrinsic calibration evaluation

The group's task was to empirically determine the best way to perform an intrinsic calibration of Kinect v2 cameras using a chessboard target (i.e., how many acquisitions to take, and at which distances and inclinations). They then calibrated four different Kinect v2 cameras and analyzed the calibration results for each camera.

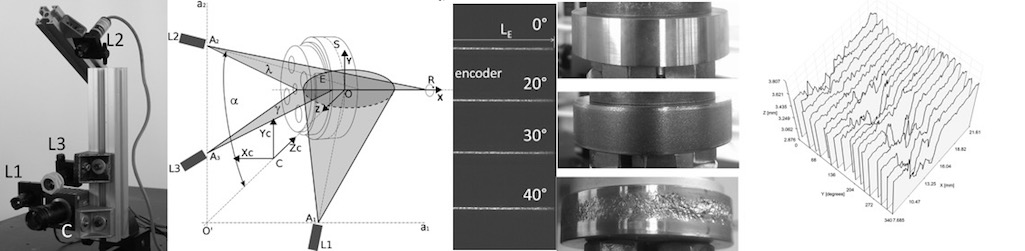

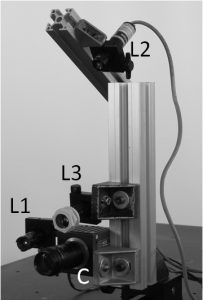

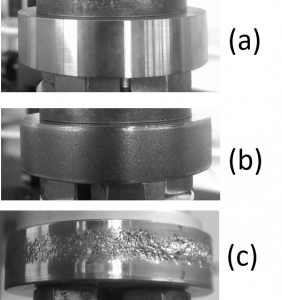

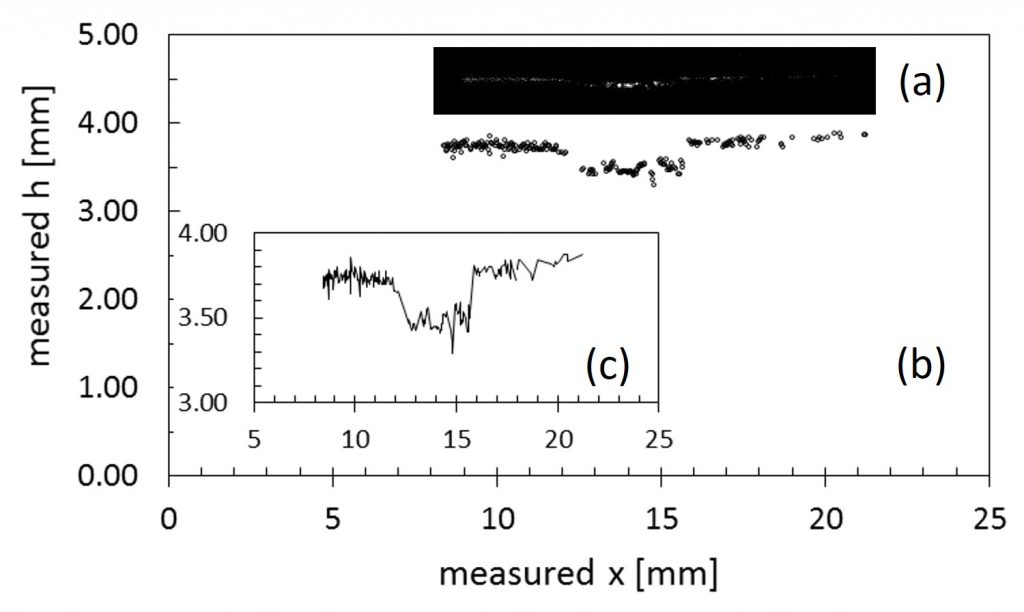

Group 3: Evaluation of a trabecular structure point cloud acquisition (1)

The group's task was to compare two different acquisitions of a small trabecular structure 3D-printed in titanium, obtained (i) with the Vivid-910 3D digitizer and (ii) with the Wenglor MLWL132 2D/3D profile sensor.

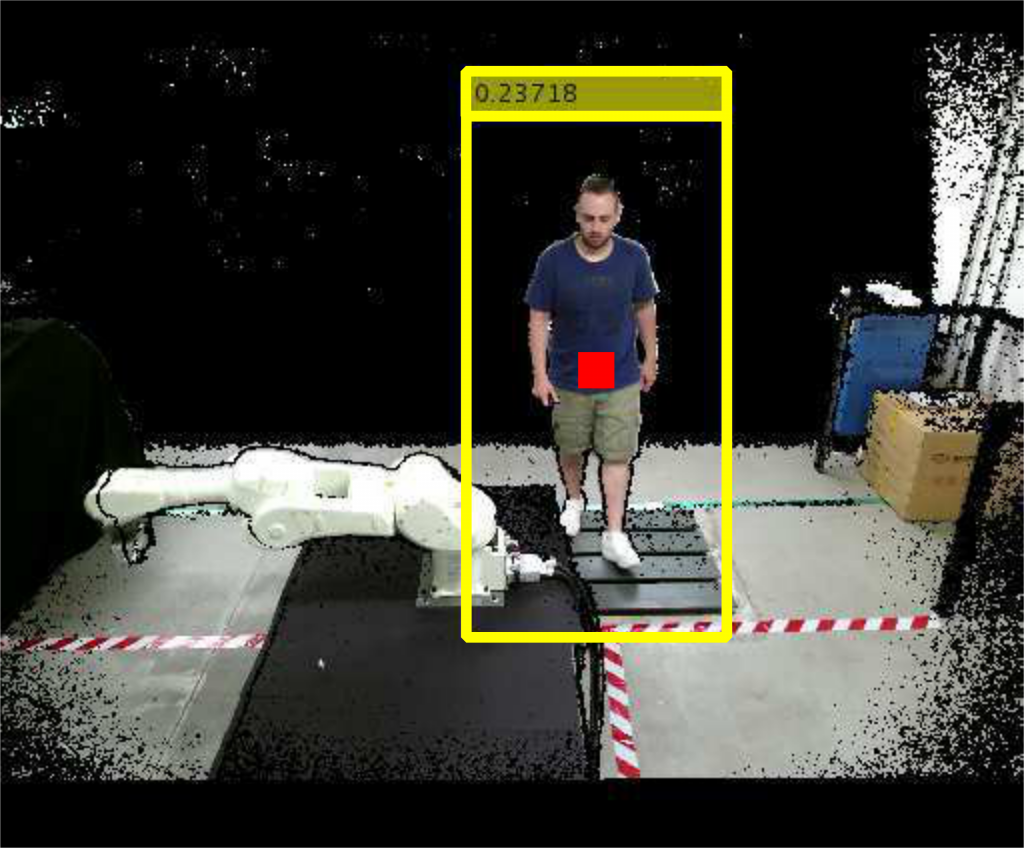

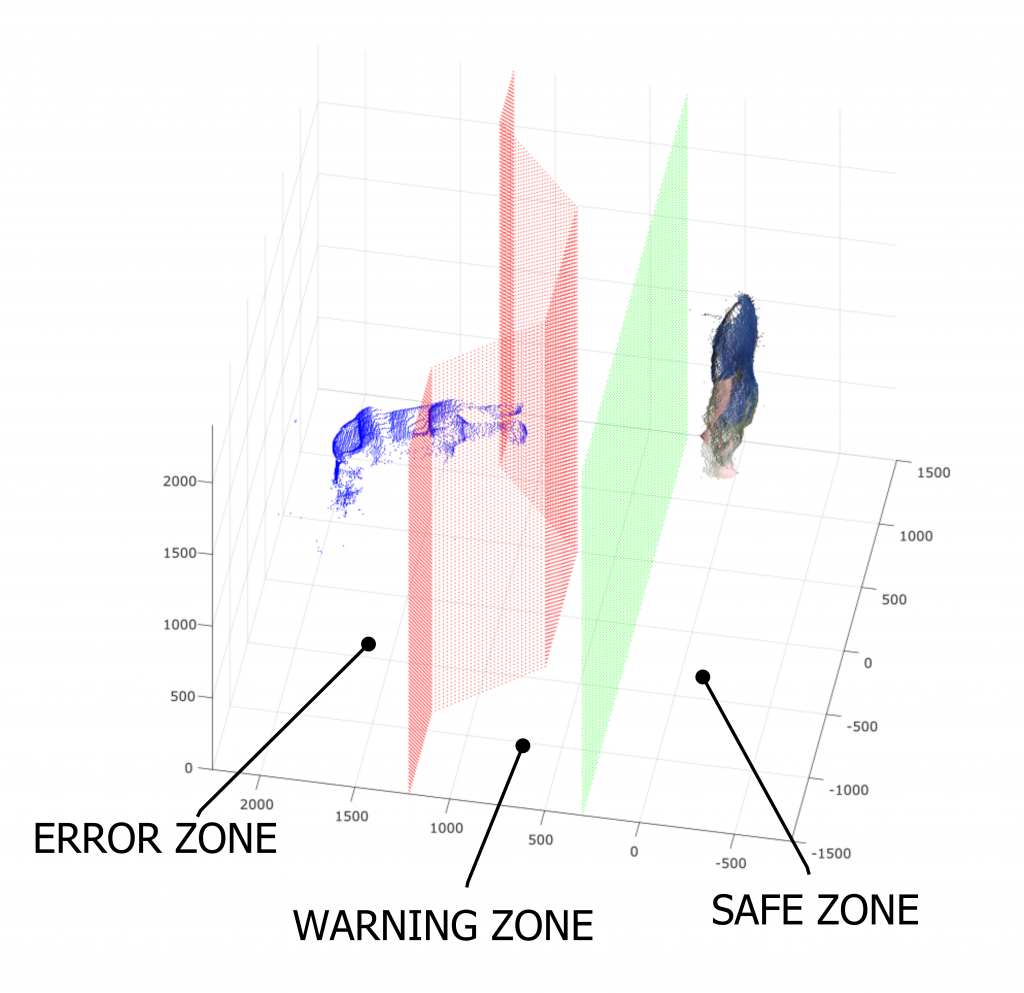

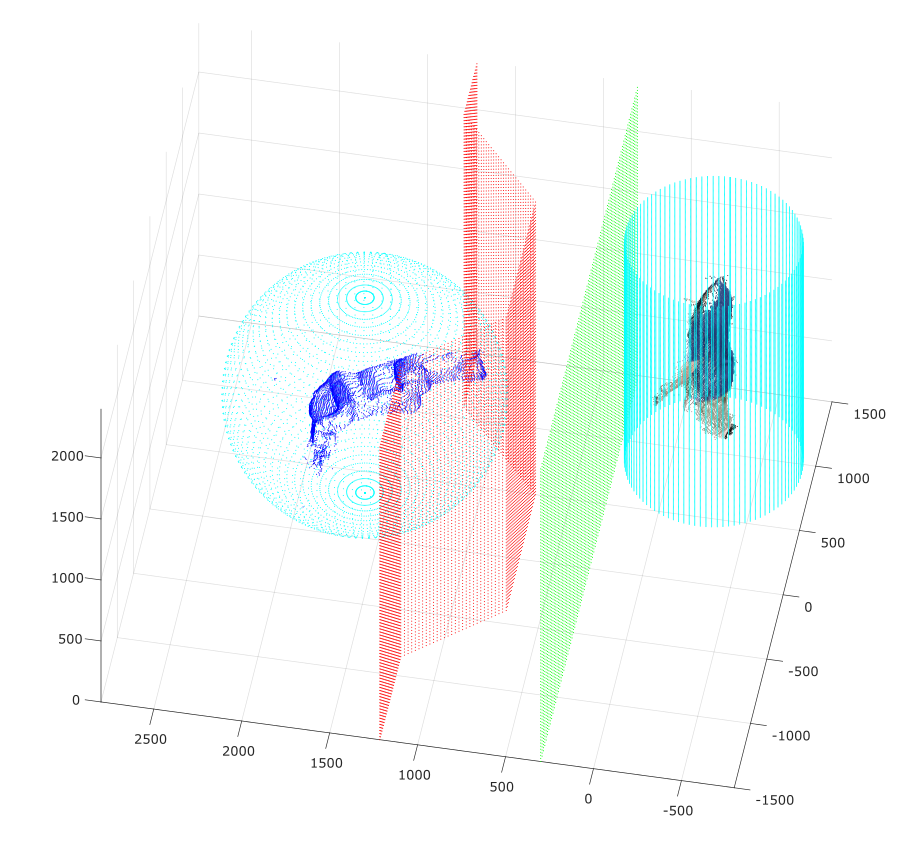

Group 4: People tracking system evaluation

The group's task was to create a simple people tracking algorithm based on the 3D point cloud acquired by a RealSense D435 camera mounted on the ceiling. The performance evaluation focused on how well the developed algorithm tracked the person's path compared to the theoretical path.

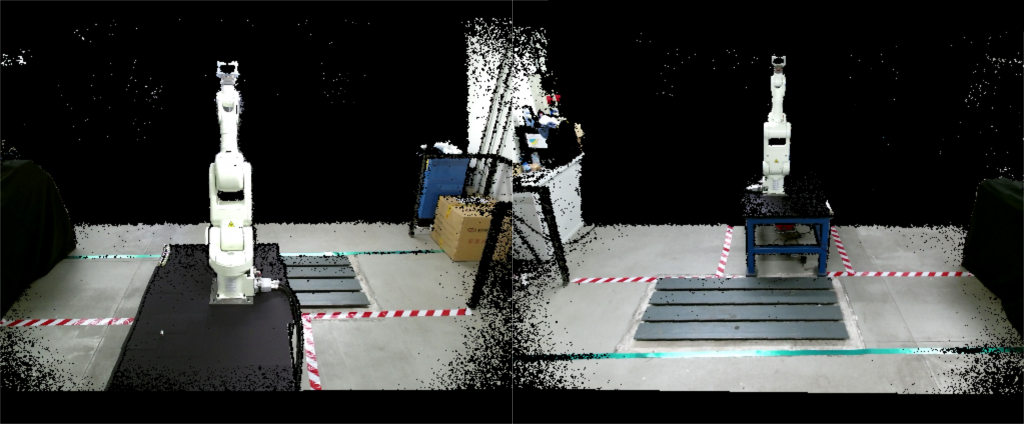

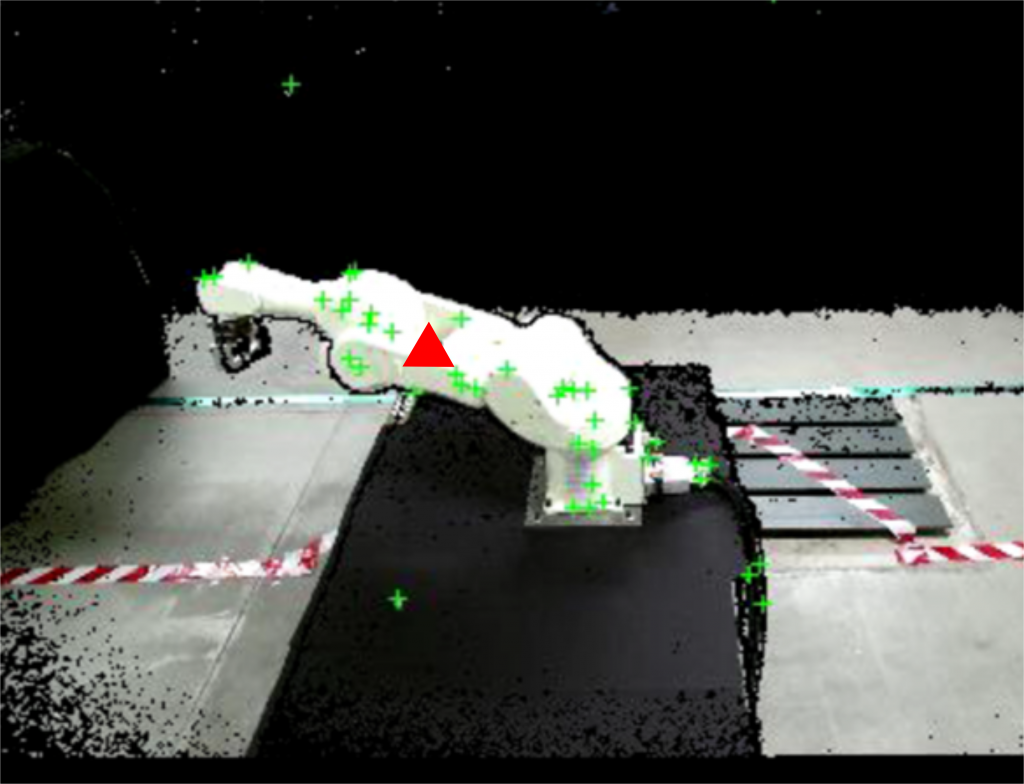

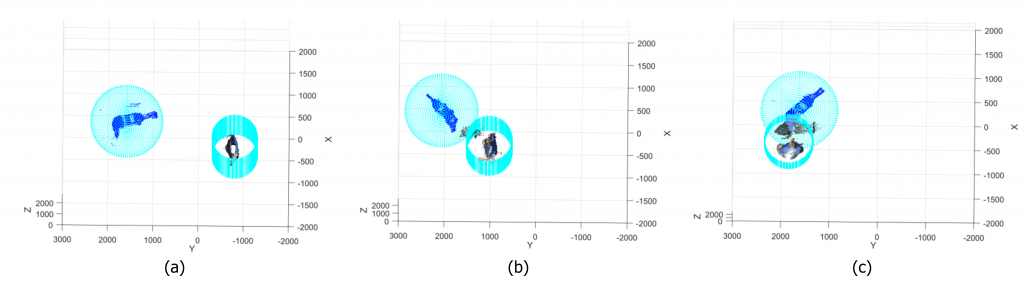

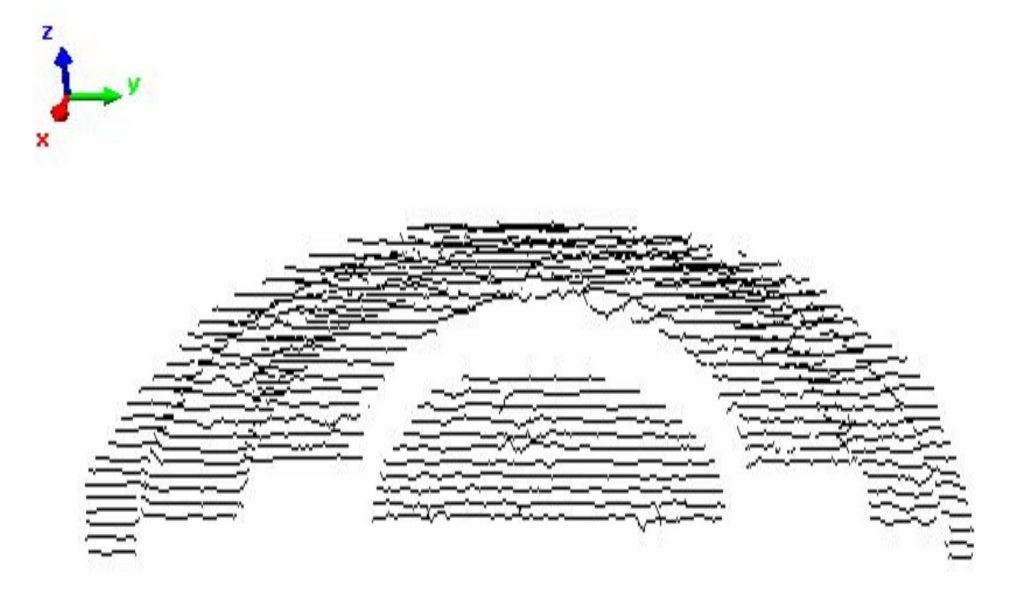
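
One simple way to quantify how closely a tracked path follows a theoretical one is to measure the distance of each tracked position from the reference path. The sketch below is only an illustration under stated assumptions, not the group's actual evaluation: it assumes the theoretical path is a 2D polyline on the floor plane, and the function names and sample coordinates are hypothetical.

```python
import math


def point_to_segment_distance(p, a, b):
    """Distance from the 2D point p to the segment with endpoints a and b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        # Degenerate segment: both endpoints coincide.
        return math.hypot(px - ax, py - ay)
    # Project p onto the segment's line and clamp the parameter to [0, 1].
    t = ((px - ax) * dx + (py - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def path_deviation(tracked, reference):
    """Return (mean, max) distance of the tracked points from the reference polyline."""
    distances = [
        min(
            point_to_segment_distance(p, reference[i], reference[i + 1])
            for i in range(len(reference) - 1)
        )
        for p in tracked
    ]
    return sum(distances) / len(distances), max(distances)


# Hypothetical data: an L-shaped theoretical path and four tracked positions (metres).
reference_path = [(0.0, 0.0), (2.0, 0.0), (2.0, 2.0)]
tracked_path = [(0.0, 0.1), (1.0, -0.1), (2.1, 1.0), (2.0, 2.0)]

mean_dev, max_dev = path_deviation(tracked_path, reference_path)
print(f"mean deviation: {mean_dev:.3f} m, max deviation: {max_dev:.3f} m")
```

The mean summarizes the overall tracking accuracy, while the maximum highlights the worst excursion from the theoretical path.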

Group 5: Extrinsic calibration of 3 Kinects using a skeletonization algorithm

The group's task was to analyze the point cloud alignments resulting from the extrinsic calibrations. The group tested different configurations with 2 and 3 Kinects in different positions, used the skeletonization algorithm to obtain the rototranslation matrices, and finally analyzed the resulting alignments in PolyWorks.
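
As a rough illustration of what a rototranslation matrix does, the sketch below builds a 4x4 homogeneous matrix, applies it to a few points from one camera, and computes the RMS residual against the corresponding points seen by a second camera. It is a minimal example under stated assumptions, not the skeletonization algorithm or the PolyWorks analysis: it assumes a rotation about the Z axis only and known point correspondences, and all names and values are hypothetical.

```python
import math


def make_rototranslation(yaw_rad, translation):
    """4x4 homogeneous matrix: rotation about Z by yaw_rad, followed by a translation."""
    c, s = math.cos(yaw_rad), math.sin(yaw_rad)
    tx, ty, tz = translation
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_points(matrix, points):
    """Apply a 4x4 rototranslation matrix to a list of (x, y, z) points."""
    transformed = []
    for x, y, z in points:
        h = (x, y, z, 1.0)  # homogeneous coordinates
        transformed.append(
            tuple(sum(matrix[r][k] * h[k] for k in range(4)) for r in range(3))
        )
    return transformed


def rms_distance(points_a, points_b):
    """RMS distance between corresponding points of two clouds."""
    squared = [math.dist(a, b) ** 2 for a, b in zip(points_a, points_b)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical data: camera B is rotated 90 degrees about Z and shifted 1 m along X
# with respect to camera A.
T_b_to_a = make_rototranslation(math.pi / 2, (1.0, 0.0, 0.0))
cloud_b = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]  # points in camera B's frame
cloud_a = [(1.0, 1.0, 0.0), (0.0, 0.0, 0.0)]  # the same points in camera A's frame

aligned_b = transform_points(T_b_to_a, cloud_b)
residual = rms_distance(aligned_b, cloud_a)
print(f"RMS alignment residual: {residual:.6f} m")
```

With a correct matrix the residual is close to zero; a larger residual indicates a poorer alignment between the two clouds.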

Group 6: Evaluation of a trabecular structure point cloud acquisition (2)

The group's task was to compare two different acquisitions of a small trabecular structure 3D-printed in titanium, obtained (i) with the Vivid-910 3D digitizer and (ii) with the Wenglor MLWL132 2D/3D profile sensor. The trabecular structure is different from the one used by Group 3.

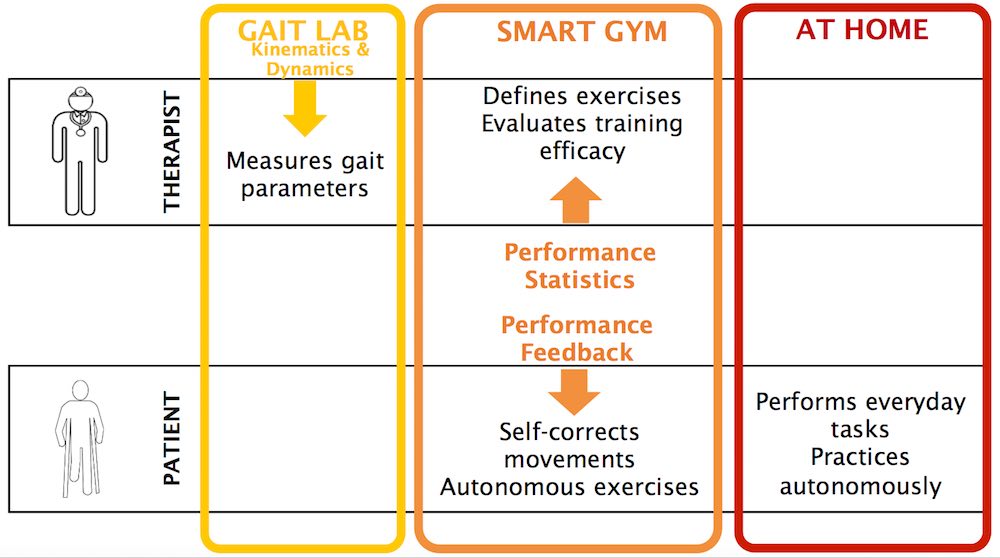

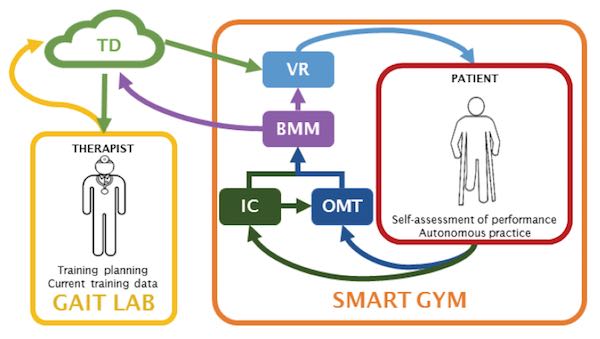

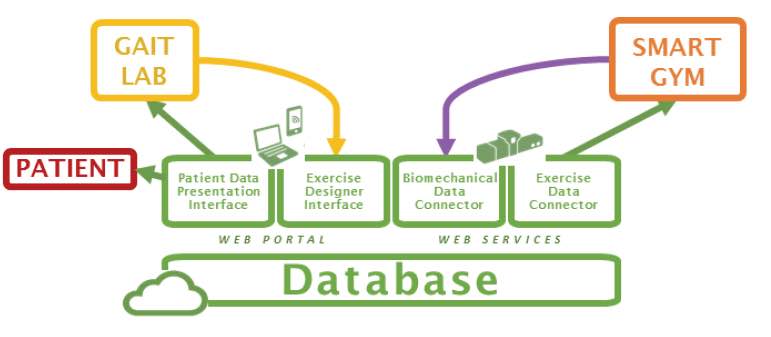

Group 7: Instrumented crutches for gait analysis results evaluation

The group's task was to acquire data from a person walking with a pair of our instrumented crutches in different outdoor setups (uphill, downhill, flat). The acquisitions were processed by our software to analyze the gait phases, and the group then had to choose the best setup conditions and filtering options based on the results of the algorithm.