Metrology techniques based on Industrial Vision are increasingly used in both research and industry. These are contactless techniques that rely on optical devices, such as RGB and depth cameras. In particular, multi-camera systems, i.e. systems composed of several cameras, are used to carry out three-dimensional measurements of various types. In addition to mechanical measurements, systems of this type are often used to monitor the movements of human subjects within a given space, as well as to reconstruct three-dimensional objects and shapes.

To perform an accurate measurement, multi-camera systems must be carefully calibrated. Calibration is a fundamental step in Computer Vision applications that involve image-based measurements. It is a key factor in obtaining reliable performance, as it establishes the correspondence between points in the workspace and the points in the images acquired by the cameras.

Calibrating a multi-camera system means estimating the geometric parameters that describe the distortion, position and orientation of each camera in the system. It is therefore necessary to identify a mathematical model that accounts for both the internal workings of the device and the relationship between the camera and the external world.

The calibration process consists of two parts:

- The intrinsic calibration, necessary to model the individual devices in terms of focal length, coordinates of the principal point and distortion coefficients of the acquired image;

- The extrinsic calibration, necessary to determine the position and orientation of each device with respect to an absolute reference system. Its result is the pose of the camera reference system in the absolute reference system, expressed as a rotation and a translation.
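To make the two sets of parameters concrete, here is a minimal sketch of the standard pinhole camera model, ignoring lens distortion. The intrinsic matrix `K` holds the focal lengths and principal point, while `R` and `t` are the extrinsic rotation and translation. All numeric values and the function name `project_point` are illustrative assumptions, not parameters of the cameras used in this work.

```python
import numpy as np

def project_point(X_world, K, R, t):
    """Project a 3D world point to pixel coordinates (no lens distortion)."""
    X_cam = R @ X_world + t          # extrinsics: world frame -> camera frame
    u, v, w = K @ X_cam              # intrinsics: camera frame -> image plane
    return np.array([u / w, v / w])  # perspective division

# Hypothetical intrinsics: focal lengths (fx, fy) and principal point (cx, cy), in pixels.
K = np.array([[1000.0,    0.0, 960.0],
              [   0.0, 1000.0, 540.0],
              [   0.0,    0.0,   1.0]])
R = np.eye(3)      # camera axes aligned with the world axes
t = np.zeros(3)    # camera placed at the world origin

pixel = project_point(np.array([0.2, -0.1, 2.0]), K, R, t)
```

Intrinsic calibration estimates `K` (plus the distortion coefficients omitted here) for each device, while extrinsic calibration estimates `R` and `t`.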

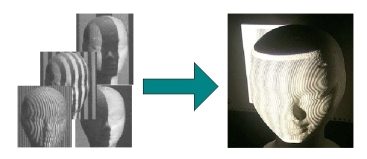

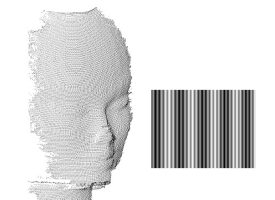

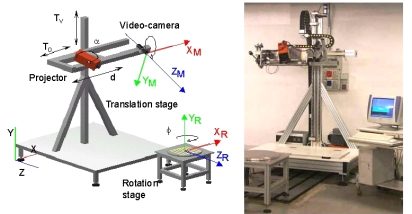

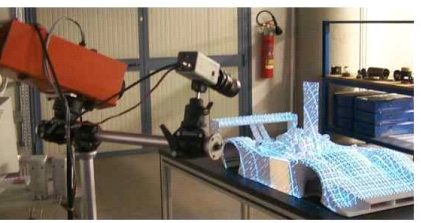

The methods based on the recognition of three-dimensional shapes rely on the geometric coherence of a 3D object positioned in the field of view (FoV) of the various cameras. Each device records only a part of the target object; by matching each partial view against the shape of the object, the displacement of each sensor relative to the object is evaluated, which yields the rotation and translation of the acquisition device itself (Fig. 2).

However, both traditional calibration methods and those based on the recognition of three-dimensional shapes have the disadvantage of being complex and computationally expensive. The former rely on a calibration target that is generally flat, which limits where the target can be placed because, in certain positions, it cannot be viewed by all cameras simultaneously. The latter must converge to a solution and therefore need a good initialization of the 3D recognition parameters by the operator, resulting in low reliability and limited automation.

Finally, the methods based on the recognition of the human skeleton use as targets the skeleton joints of a person positioned within the FoV of the cameras. Skeleton-based methods therefore represent an evolution of the 3D shape matching methods, since the human is treated as the target object (Fig. 3).

In this thesis, we exploited skeleton-based methods to create a new calibration method that is (i) easier to use than known methods, since users only need to stand in front of a camera while the system takes care of everything else, and (ii) faster and computationally inexpensive.

STEP 1: Measurement set-up

The proposed set-up is very simple: it is composed of a pair of Kinect v2 cameras, each intrinsically calibrated using known procedures. This step is required to correctly align the color information with the depth information acquired by the camera.

The cameras are placed so that they suitably cover the measurement area. After the calibration images have been taken, they are processed by a skeletonization algorithm based on OpenPose, which also takes the depth information into account to correctly place the skeleton in the 3D world [1]. Joint coordinates are thus extracted for every pair of color-depth images, which are aligned both spatially and temporally.
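As an illustration of how depth can lift a 2D joint into 3D, the sketch below back-projects a pixel with a known depth value through the pinhole model. This is a generic technique and not necessarily the exact step used in [1]; the function name `backproject` and the intrinsic values are assumptions chosen for the example, not the calibrated Kinect v2 parameters.

```python
import numpy as np

def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Convert a pixel (u, v) with depth in metres to a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return np.array([x, y, depth_m])

# Hypothetical intrinsics (pixels) for a 512x424 depth image.
fx = fy = 365.0
cx, cy = 256.0, 212.0

# A joint detected at pixel (329, 139) whose aligned depth reading is 2.0 m.
joint_3d = backproject(329.0, 139.0, 2.0, fx, fy, cx, cy)
```

Applying this to every detected joint gives one set of 3D joint coordinates per camera, expressed in that camera's own reference system.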

To obtain accurate joint positions, we then run an optimization procedure, written in MATLAB, that refines the placement of the joints on the human figure by minimizing an error function.

Fig. 4 shows the steps described above, while Fig. 5 shows a detail of the skeletonization algorithm used.

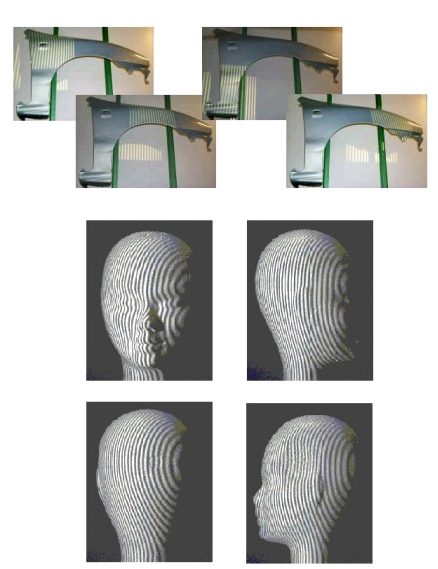

STEP 2: Validation of the proposed procedure

To validate the system we used a mannequin kept still in 3 different positions (2 m, 3.5 m, 5 m), showing both its front and its back to the cameras. Both orientations were tested because skeletonization procedures are usually more robust when the subject faces the camera, since the face keypoints are also visible. The validation positions are shown in Fig. 6, while the joint positions calculated by the procedure are shown in Fig. 7 and 8.

STEP 3: Calibration experiments

We tested the system in 3 configurations shown in Fig. 9: (a) when the two cameras are placed in front of the operator, one next to the other; (b) when a camera is positioned in front of the operator and the other is positioned laterally with an angle between them of 90°; (c) when the two cameras are positioned with an angle of 180° between them (one in front of the other, operator in the middle).

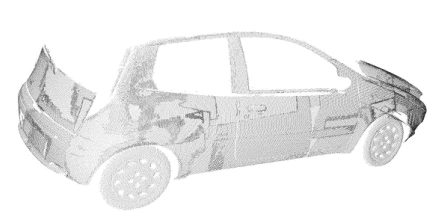

We first obtained the calibration matrix using our procedure, in which the target is the human subject placed in the middle of the scene (at position zero). Then, we compared this calibration matrix to the one obtained from another algorithm developed by the University of Trento [2]. This algorithm is based on the recognition of a known 3D object, in this case the green sphere shown in Fig. 2.
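One common way to turn matched 3D joints from two cameras into a calibration matrix is the Kabsch algorithm, which finds the least-squares rigid transform between two sets of corresponding points. The sketch below is an illustration under that assumption and is not necessarily the estimation step used in our procedure; `rigid_transform` and the synthetic joints are hypothetical.

```python
import numpy as np

def rigid_transform(src, dst):
    """Return the 4x4 matrix T such that dst is approximately R @ src + t (Kabsch).

    src, dst: (N, 3) arrays of corresponding 3D points, N >= 3, not collinear.
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)          # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # guard against a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    T = np.eye(4)
    T[:3, :3], T[:3, 3] = R, t
    return T

# Synthetic check: 15 joints seen by camera 2, and the same joints seen by camera 1
# under a known 90 degree rotation about the vertical axis plus a translation.
rng = np.random.default_rng(0)
joints_cam2 = rng.uniform(-1.0, 1.0, size=(15, 3))
a = np.pi / 2
R_true = np.array([[ np.cos(a), 0.0, np.sin(a)],
                   [ 0.0,       1.0, 0.0      ],
                   [-np.sin(a), 0.0, np.cos(a)]])
t_true = np.array([2.0, 0.0, 2.0])
joints_cam1 = joints_cam2 @ R_true.T + t_true

T_est = rigid_transform(joints_cam2, joints_cam1)  # maps camera 2 frame -> camera 1 frame
```

With noise-free correspondences the estimated matrix reproduces the known rotation and translation; with real joints the result is the best fit in the least-squares sense.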

The calibration obtained from each method was evaluated using a 3D object, a cylinder of known shape, placed in 7 different positions in the scene as shown in Fig. 10. The exact same positions were used for each configuration.
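A simple way to quantify how well a calibration matrix aligns the two views of the same object is to transform one point cloud into the other camera's frame and measure the mean nearest-neighbour distance. The sketch below illustrates this idea on synthetic data; the metric, the function names and the point clouds are assumptions for the example, not the evaluation carried out in this work.

```python
import numpy as np

def transform_cloud(T, cloud):
    """Apply a 4x4 rigid transform to an (N, 3) point cloud."""
    return cloud @ T[:3, :3].T + T[:3, 3]

def mean_nn_distance(cloud_a, cloud_b):
    """Mean distance from each point of cloud_a to its nearest point in cloud_b.

    Brute force, O(N*M) memory: suitable only for small clouds.
    """
    diff = cloud_a[:, None, :] - cloud_b[None, :, :]
    return np.linalg.norm(diff, axis=2).min(axis=1).mean()

# Synthetic object seen by camera 1, and the same points expressed in camera 2's frame.
rng = np.random.default_rng(1)
cloud_cam1 = rng.uniform(-0.2, 0.2, size=(200, 3)) + np.array([0.0, 0.0, 2.0])
T_good = np.eye(4)
T_good[:3, 3] = [1.0, 0.0, 0.5]                   # hypothetical camera 2 -> camera 1 transform
cloud_cam2 = cloud_cam1 - T_good[:3, 3]           # inverse of a pure translation

T_bad = T_good.copy()
T_bad[:3, 3] += [0.02, 0.0, 0.0]                  # same transform with a 2 cm error

err_good = mean_nn_distance(transform_cloud(T_good, cloud_cam2), cloud_cam1)
err_bad = mean_nn_distance(transform_cloud(T_bad, cloud_cam2), cloud_cam1)
```

A lower residual indicates a better alignment, so the same measure can be computed for each candidate calibration matrix and each object position.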

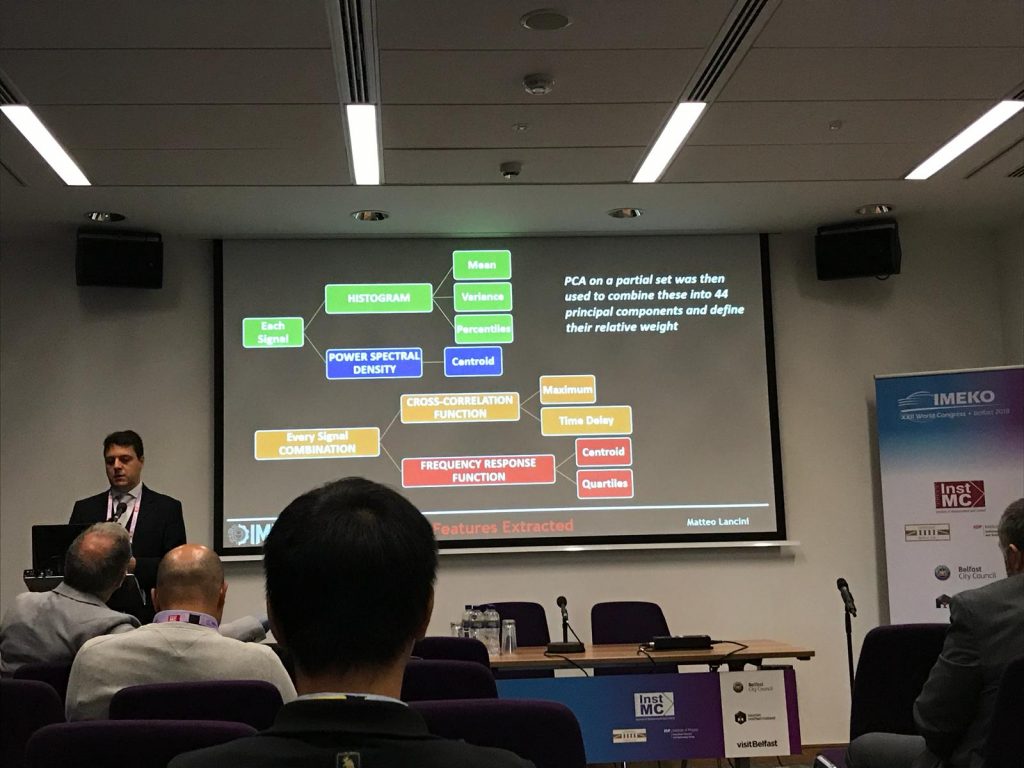

Finally, we compared the results by aligning the point clouds obtained with the two calibration matrices. These results were processed in PolyWorks for better visualization and are shown in the presentation below. Feel free to download it to learn more about the project and to view the results!