Smart tracking systems have become a necessity in many fields, especially in industry. OpenPTrack is a successful open-source software package developed by the University of Padua. Based on ROS (Robot Operating System), it tracks humans in the scene using well-known tracking algorithms that operate on 3D point cloud data, and it also tracks objects by additionally using colour information.

Impressed by the capabilities of the software, we decided to study its performance further. The aim of this thesis project is to carefully characterize the measurement performance of OpenPTrack for both humans and objects, using a set of Kinect v2 sensors.

Step 1: Calibration of the sensors

It is of utmost importance to correctly calibrate the sensors when performing a multi-sensor acquisition.

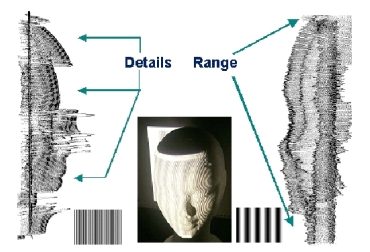

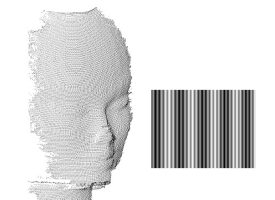

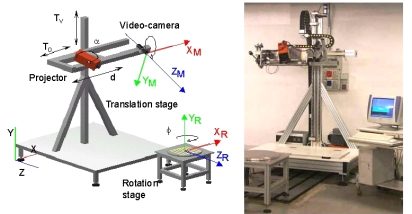

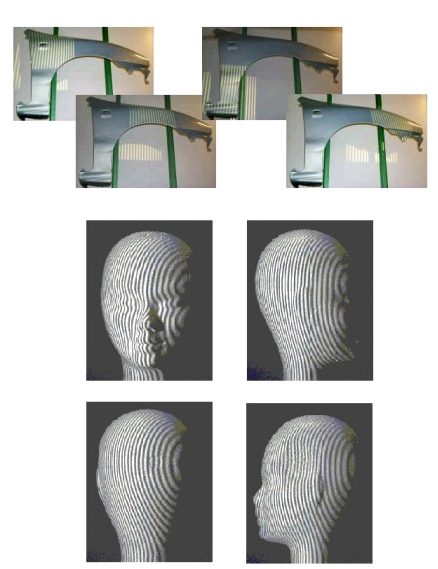

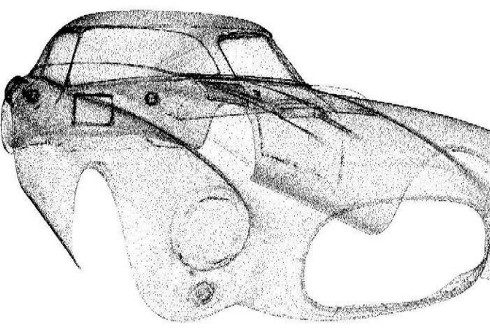

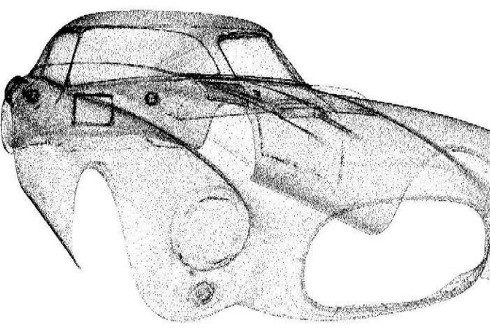

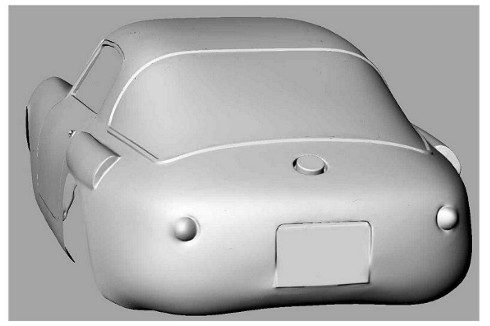

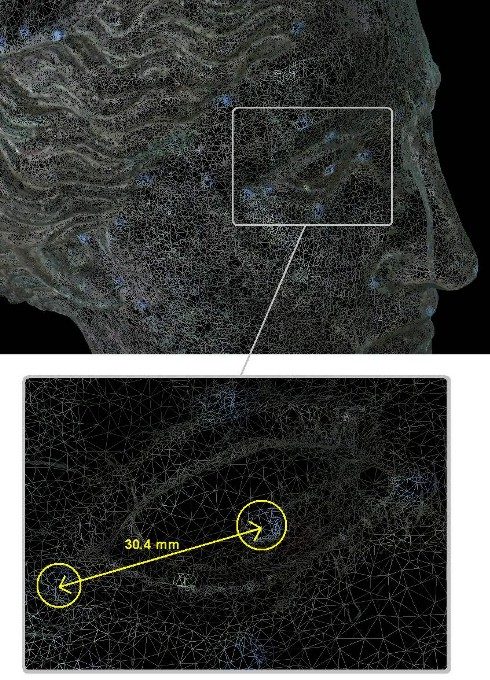

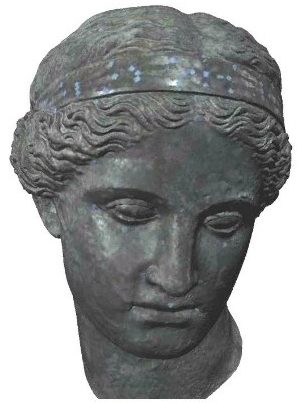

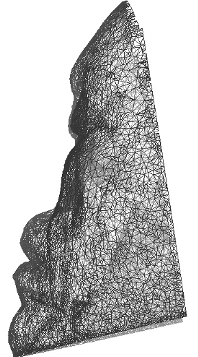

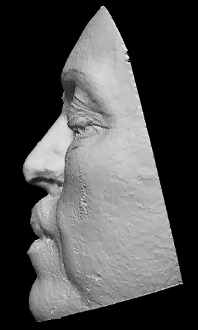

Two types of calibration are necessary: (i) the intrinsic calibration, which aligns the acquired colour information (or grayscale/IR, as in the case of OpenPTrack) with the depth information (Fig. 1), and (ii) the extrinsic calibration, which aligns the views obtained by the different cameras to a common reference system (Fig. 2).
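As a minimal sketch of what the extrinsic calibration provides, the snippet below maps a point from a camera's own frame into a common reference frame using a 4x4 homogeneous transform. The function names, the yaw-only rotation and the pose values are illustrative assumptions; they are not OpenPTrack's API nor the calibration obtained in the thesis.

```python
import math

def make_pose(yaw_deg, tx, ty, tz):
    """Build a 4x4 homogeneous transform (hypothetical helper).

    Assumption: the camera is rotated only about the vertical z axis
    by yaw_deg and then translated by (tx, ty, tz).
    """
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    return [
        [c, -s, 0.0, tx],
        [s, c, 0.0, ty],
        [0.0, 0.0, 1.0, tz],
        [0.0, 0.0, 0.0, 1.0],
    ]

def to_world(pose, point):
    """Express a 3D point given in the camera frame in the common frame."""
    h = (point[0], point[1], point[2], 1.0)  # homogeneous coordinates
    return tuple(sum(pose[r][k] * h[k] for k in range(4)) for r in range(3))

# Illustrative poses: camera A sits at the origin of the common frame,
# camera B is rotated by 90 degrees and shifted 2 m along x.
pose_a = make_pose(0.0, 0.0, 0.0, 0.0)
pose_b = make_pose(90.0, 2.0, 0.0, 0.0)

# The same physical target, as each camera would report it in its own frame.
seen_by_a = (1.0, 1.0, 0.0)
seen_by_b = (1.0, 1.0, 0.0)

world_a = to_world(pose_a, seen_by_a)
world_b = to_world(pose_b, seen_by_b)
```

With a correct extrinsic calibration, `world_a` and `world_b` coincide up to measurement noise, which is what allows detections from different sensors to be merged into a single track.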

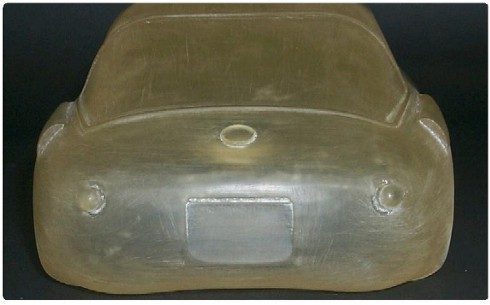

The software provides suitable tools to perform these steps, as well as a tool to further refine the resulting extrinsic calibration (Fig. 3). For this refinement, a human operator walks around the scene: their trajectory is acquired by every sensor and, at the end of the recording, the procedure aligns the trajectories more precisely.
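The idea of aligning trajectories can be illustrated with a closed-form rigid fit on the ground plane. This is only a sketch of the general technique (a 2D least-squares rotation and translation), not the procedure OpenPTrack actually implements. It assumes that the two trajectories are time-synchronized, so that sample i of one sensor corresponds to sample i of the other; all names are hypothetical.

```python
import math

def fit_rigid_2d(source, target):
    """Least-squares rotation angle and translation mapping source onto target.

    Both arguments are lists of (x, y) points with matching indices.
    """
    n = len(source)
    sx = sum(p[0] for p in source) / n
    sy = sum(p[1] for p in source) / n
    tx = sum(q[0] for q in target) / n
    ty = sum(q[1] for q in target) / n

    dot = 0.0
    cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - sx, py - sy  # centred source sample
        bx, by = qx - tx, qy - ty  # centred target sample
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx

    theta = math.atan2(cross, dot)  # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    # Translation that maps the rotated source centroid onto the target centroid.
    offset = (tx - (c * sx - s * sy), ty - (s * sx + c * sy))
    return theta, offset

def apply_rigid_2d(theta, offset, points):
    """Rotate points by theta and shift them by offset."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + offset[0], s * x + c * y + offset[1]) for x, y in points]

# Illustrative data: the walk as seen by one sensor, and the same walk as seen
# by a second sensor whose extrinsic calibration is slightly off.
walk_sensor_1 = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 2.0)]
walk_sensor_2 = apply_rigid_2d(0.1, (0.3, -0.2), walk_sensor_1)

theta, offset = fit_rigid_2d(walk_sensor_1, walk_sensor_2)
```

The recovered `theta` and `offset` describe the residual misalignment between the two sensors; applying the inverse correction to the second sensor's extrinsic pose would bring the two trajectories into agreement.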

Each of these calibration processes is completely automatic and performed by the software.

Step 2: Definition of the measurement area

Two Kinect v2 sensors were used for the project, mounted on tripods and placed so as to cover the largest possible field of view (FoV) (Fig. 4). A total of 31 positions were defined in the area: these are the spots where the targets to be measured were placed in the two experiments, chosen to cover the whole available FoV. Note that not every spot lies in a region seen by both Kinects, and that three performance regions are highlighted in the figure: the best-performing region overall (light green) and the two single-camera regions, where only one camera (the closer one) sees the target with good performance.

Step 3: Evaluation of Human Detection Algorithms

The performance was evaluated using four parameters:

- MOTA (Multiple Object Tracking Accuracy), to measure if the algorithm was able to detect the human in the scene;

- MOTP (Multiple Object Tracking Precision), to measure the accuracy of the barycenter estimation relative to the human figure;

- (Ex, Ey, Ez), the mean error between the estimated barycenter position and the known reference barycenter position, for each spatial dimension (x, y, z);

- (Sx, Sy, Sz), the variability of the errors, which measures the repeatability of the measurements for each spatial dimension (x, y, z).
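The four parameters above can be sketched as follows for a single target standing at a known spot. The MOTA and MOTP formulas follow the commonly used CLEAR MOT definitions, and the variability is taken as the sample standard deviation; the exact variants used in the thesis may differ. Function names and sample values are illustrative assumptions.

```python
import math
from statistics import mean, stdev

def axis_errors(estimates, reference):
    """Return ((Ex, Ey, Ez), (Sx, Sy, Sz)) for repeated barycenter estimates.

    estimates: list of (x, y, z) positions reported by the tracker.
    reference: known (x, y, z) position of the target.
    """
    diffs = [[est[i] - reference[i] for est in estimates] for i in range(3)]
    mean_error = tuple(mean(d) for d in diffs)
    variability = tuple(stdev(d) for d in diffs)  # sample standard deviation
    return mean_error, variability

def mota(misses, false_positives, id_switches, ground_truth_count):
    """CLEAR MOT accuracy: 1 - (FN + FP + ID switches) / ground-truth objects."""
    return 1.0 - (misses + false_positives + id_switches) / ground_truth_count

def motp(estimates, reference):
    """CLEAR MOT precision: mean distance between matched estimates and truth."""
    return mean(math.dist(est, reference) for est in estimates)

# Made-up values for illustration only, in metres.
reference = (1.0, 2.0, 1.0)
estimates = [(1.0, 2.0, 0.9), (1.2, 2.0, 1.1)]

(ex, ey, ez), (sx, sy, sz) = axis_errors(estimates, reference)
accuracy = mota(misses=1, false_positives=0, id_switches=0, ground_truth_count=10)
precision = motp(estimates, reference)
```

With these definitions, a higher MOTA means fewer missed or spurious detections, while lower MOTP, E and S values mean a more accurate and more repeatable position estimate.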

Step 4: Evaluation of Object Detection Algorithms

The performance was evaluated using the same parameters as for the Human Detection algorithms, applied to the tracked object instead.

If you want to know more about the project and the results obtained, please download the master's thesis below.