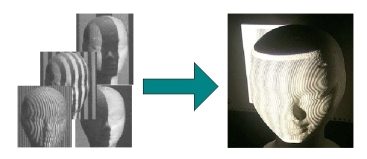

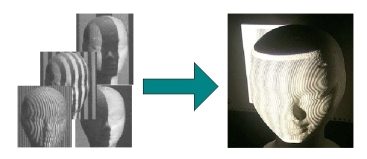

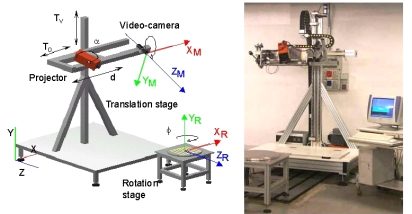

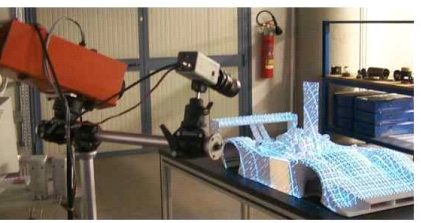

This project was developed within the Italian national project "Low-cost 3D imaging and modeling automatic system" (LIMA3D). The Laboratory's aim was to design and develop a low-cost optical digitizer based on the projection of a single grating of non-coherent light, and to perform its metrological characterization.

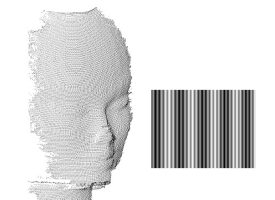

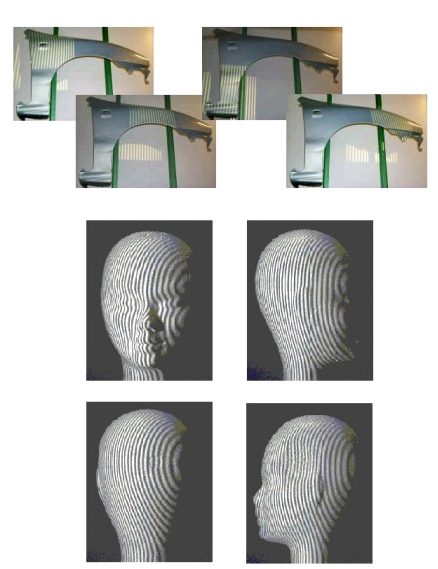

SGPS (Single Grating Phase-Shift) is a whole-field profilometer based on the projection of a single pattern of Ronchi fringes. To meet the low-cost requirement, a simple slide projector can be used instead of sophisticated and very expensive devices.
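The text does not detail how SGPS extracts phase, so the following is only a sketch of the textbook N-step phase-shift formula. It is not the Laboratory's method. It assumes N frames are acquired with the grating translated by equal steps of 1/N of its period, giving phase shifts δ_k = 2πk/N. It also assumes the fringe intensity at each pixel is approximately sinusoidal, I_k = A + B·cos(φ + δ_k). That is an assumption for Ronchi (square-wave) fringes, which are only approximately sinusoidal after projection. The names `wrapped_phase` and `simulate` are hypothetical.

```python
import math
from typing import Sequence


def wrapped_phase(samples: Sequence[float]) -> float:
    """Wrapped phase in [-pi, pi] at one pixel from N >= 3 phase-shifted samples.

    Assumed model (not taken from the SGPS description):
        I_k = A + B * cos(phi + delta_k),  delta_k = 2*pi*k/N
    """
    n = len(samples)
    if n < 3:
        raise ValueError("need at least 3 phase-shifted samples")
    s = sum(i * math.sin(2 * math.pi * k / n) for k, i in enumerate(samples))
    c = sum(i * math.cos(2 * math.pi * k / n) for k, i in enumerate(samples))
    # Under the model, s = -(N/2)*B*sin(phi) and c = (N/2)*B*cos(phi);
    # the offset A and the modulation B cancel out in the ratio.
    return math.atan2(-s, c)


def simulate(phi: float, n: int = 4, a: float = 0.5, b: float = 0.4) -> list[float]:
    """Synthetic intensities for a pixel whose true phase is phi (illustrative values)."""
    return [a + b * math.cos(phi + 2 * math.pi * k / n) for k in range(n)]


if __name__ == "__main__":
    for true_phi in (-2.0, 0.3, 1.5):
        print(true_phi, round(wrapped_phase(simulate(true_phi)), 6))
```

The result is only known modulo 2π, which is why a separate demodulation/unwrapping step is needed to turn it into a usable, monotonic phase map.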

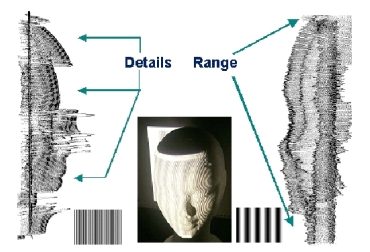

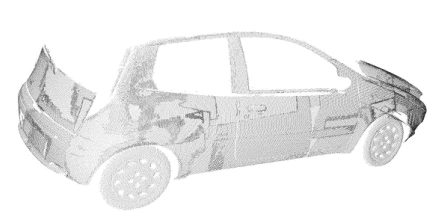

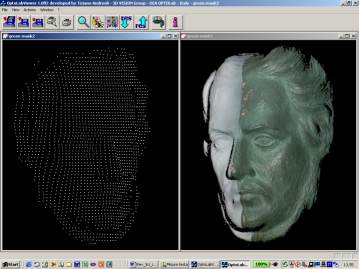

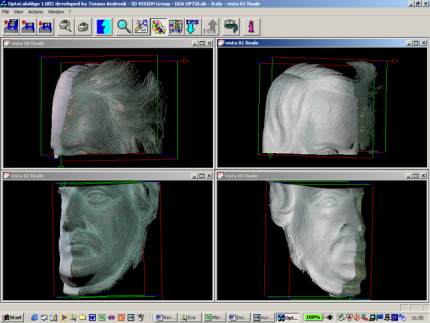

A novel approach to fringe phase demodulation has been developed that yields phase values increasing monotonically along the direction perpendicular to the fringes. As a result, the optical head can be calibrated in an absolute way, and very dense point clouds are obtained directly in an absolute reference system. In addition, the system set-up is very easy, the device is portable and can be reconfigured to suit the measurement problem, and multi-view acquisition is easily performed.
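The novel demodulation approach itself is not described here. For readers unfamiliar with the goal, the sketch below shows a standard substitute: Itoh's one-dimensional unwrapping applied along a row perpendicular to the fringes. It turns a sawtooth of wrapped phases into a monotonically increasing profile. It assumes the true phase changes by less than π between neighbouring pixels and that the row contains no shadows or discontinuities. The function names are hypothetical.

```python
import math
from typing import Sequence


def wrap(phi: float) -> float:
    """Map an angle to [-pi, pi]."""
    return math.atan2(math.sin(phi), math.cos(phi))


def unwrap_row(wrapped: Sequence[float]) -> list[float]:
    """Unwrap a 1-D profile of wrapped phases (Itoh's method).

    Assumption: neighbouring samples differ by less than pi in true phase,
    so any jump larger than pi in magnitude is treated as a 2*pi wrap.
    """
    if not wrapped:
        return []
    out = [wrapped[0]]
    offset = 0.0
    for prev, cur in zip(wrapped, wrapped[1:]):
        d = cur - prev
        if d < -math.pi:      # fell from near +pi to near -pi: next fringe
            offset += 2 * math.pi
        elif d > math.pi:     # opposite wrap (phase decreasing)
            offset -= 2 * math.pi
        out.append(cur + offset)
    return out


if __name__ == "__main__":
    # Hypothetical row: phase grows by 0.4 rad per pixel across the fringes.
    true_phase = [0.4 * x for x in range(50)]
    row = [wrap(p) for p in true_phase]
    unwrapped = unwrap_row(row)
    print(all(b > a for a, b in zip(unwrapped, unwrapped[1:])))
```

A real measurement would apply this (or a more robust 2-D method) to every row of the wrapped-phase map. Noise, shadows and steep surface steps can break the "less than π per pixel" assumption, which is one reason dedicated demodulation strategies such as the one developed for SGPS are worthwhile.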