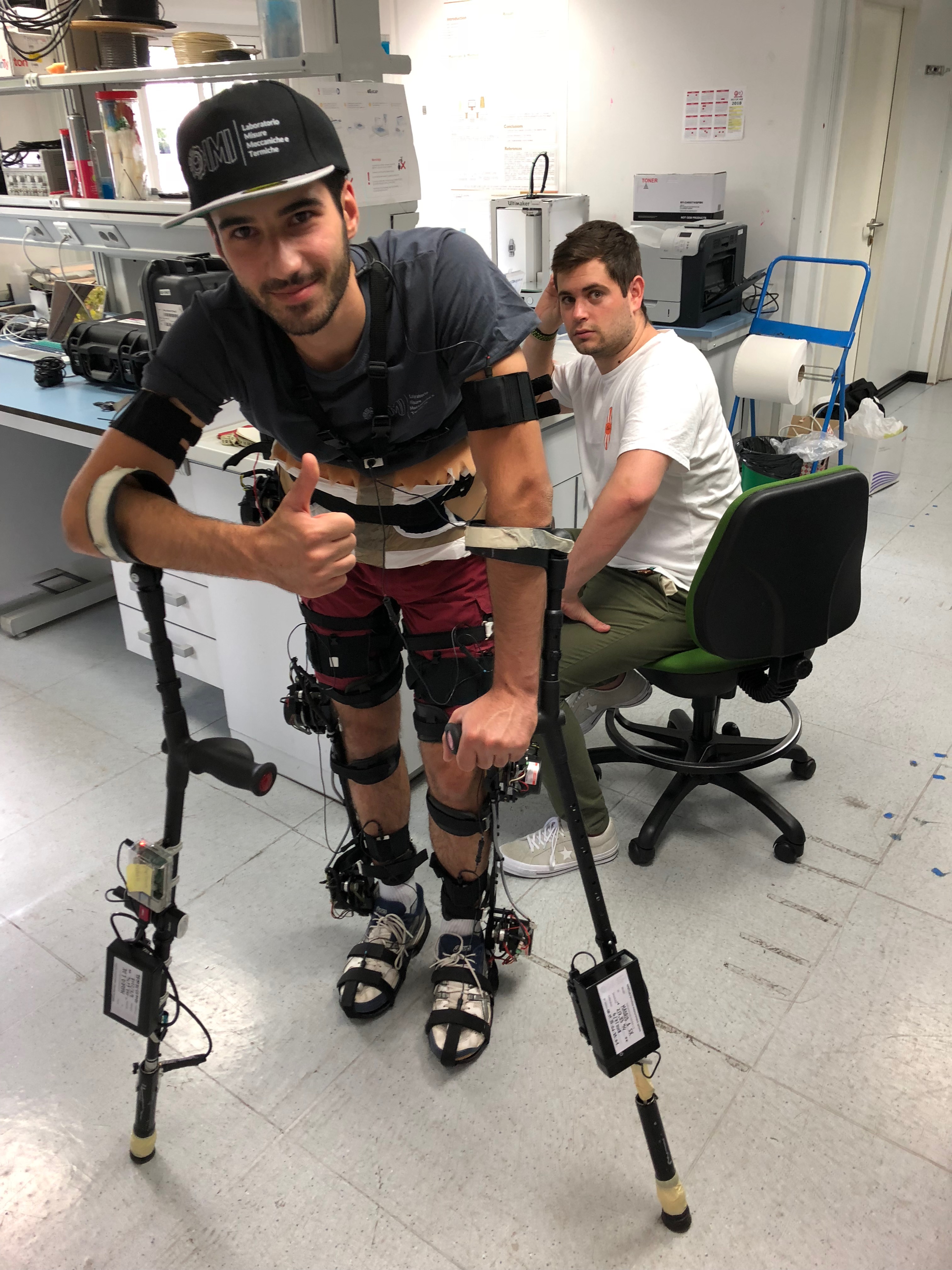

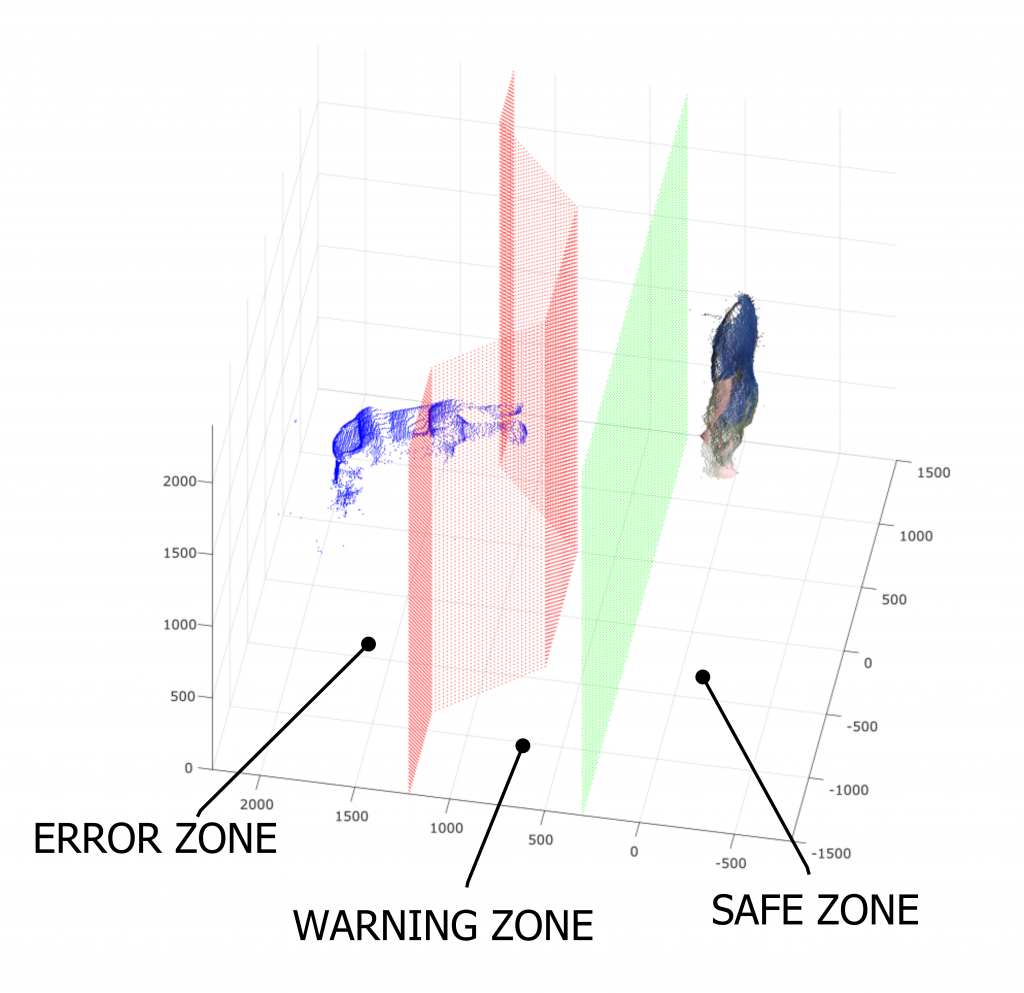

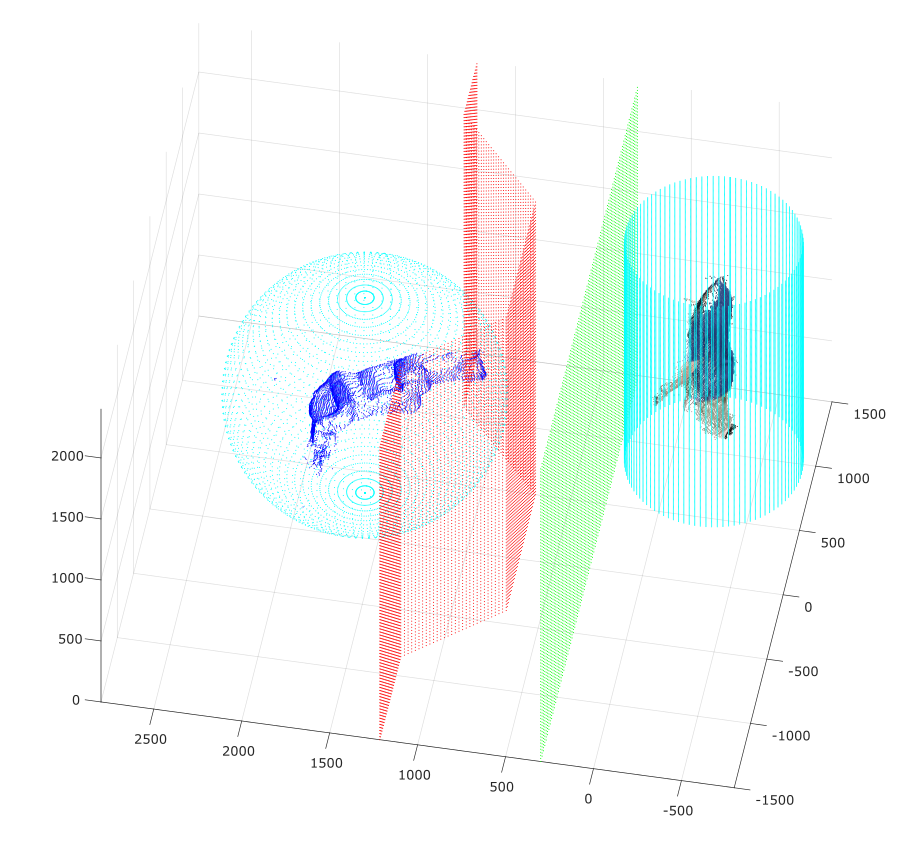

Despite the uncertain weather, the TRAP-Lab and BRaIN teams had an intense day of testing. The new motion capture system, installed on an experimental basis at the TRAP CONCAVERDE field in Lonato del Garda, makes it possible to measure the kinematics and dynamics of the athlete both before and during the shooting action. This exploratory test will be used to evaluate the system’s reliability and the accuracy of its measurements.

Nov, 2018